Chatbots are more than a dime a dozen these days – in fact I’m struggling to recall the last time I visited a site and a bot didn’t pop up to offer me some friendly, albeit generic, assistance. Now, I’ve got nothing against our little digital helpers (some might even argue that, as a UX writer, they’re my bread and butter), but their proliferation has prompted some introspection and an investigation into what it is that makes a bot good (or not).

While not all bots are created equal, most have evolved quite significantly over the last few years. First, we had the basic chatbot – the one we all know and love (to hate). Think menu-based, pick-a-number bots with somewhat limited options and unlimited instances of “Sorry, I didn’t get that.” Essentially, these chatbots are pre-programmed (or even scripted) to provide responses to specific inputs. If a user says X, the response will always be Y.

These chatbots rely 100% on human intelligence, as the bot itself doesn’t do any thinking of its own.
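To make the “if X, then always Y” idea concrete, here is a minimal sketch of a scripted bot. The menu options and replies are made up for illustration; the only point is that every response is written in advance by a person and looked up by exact match.

```python
# Hypothetical menu-based bot: every reply is written in advance by a human.
SCRIPTED_REPLIES = {
    "1": "Your balance is shown under Accounts > Overview.",
    "2": "Our branches are open from 09:00 to 17:00 on weekdays.",
    "3": "Connecting you to an agent.",
}

FALLBACK = "Sorry, I didn't get that."


def scripted_reply(user_input: str) -> str:
    """Return the pre-written reply for an exact input, or the fallback."""
    key = user_input.strip()
    # No interpretation happens here: an unknown input always hits the fallback.
    return SCRIPTED_REPLIES.get(key, FALLBACK)
```

Typing `2` always returns the opening hours, while anything off-menu (even a perfectly reasonable question) lands on the fallback line.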

Now let’s skip ahead to where GPTs (Generative Pre-trained Transformers) and other AI models are able to carry on full conversations without any scripted responses or human intervention at all. If a user asks X, the response could be Y or Z. It may even be A or B, or whatever else the model determines it to be, based on its training data. Amazing, right?! Perhaps not quite…

I’ll admit that, as a writer, there was a brief moment when I was also a little worried that AI might be coming for my job. That was, until I got to spend more time with the AI fundis and began to understand how the tech actually works.

Under the hood

If we look under the hood of the “magical” content-creating, word-generating genius that is ChatGPT, we find that, like other generative language models, it simply operates by predicting the next piece of data in a sequence – which, for many chatbots, means the next most probable word (strictly speaking, the next token, which may be a word or part of one). Exactly which word it chooses next depends on the data it was trained on – whether that’s a vast collection of text, much of it from the web, up until April 2023 (in the case of GPT-4 Turbo at launch) or a company’s own data and internal resources (think “build your own GPT”).

How a GenAI model chooses the word is nothing more than an algorithmic decision.

There’s no creativity, nor any real thinking involved – it’s simply stringing together a bunch of most probable words to create a response to what was asked (user input). It’s a bit like that time your Facebook timeline was filled with nonsensical sentences created by friends typing “Today is…” and then simply tapping the middle word on their predictive text until they felt their status would get the most LOLs.
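The predictive-text comparison can be sketched in a few lines. This is a toy illustration, not how ChatGPT is built: it counts which word follows which in a tiny made-up text and always picks the most frequent follower. Real models work on tokens, use neural networks trained on vastly more data, and usually sample from a probability distribution rather than always taking the top choice. All names below are hypothetical.

```python
from collections import Counter, defaultdict

# Tiny made-up training text; a real model learns from vastly more data.
TRAINING_TEXT = (
    "today is a good day . "
    "today is a good day . "
    "today is a long day . "
    "today is going well ."
)


def train_bigrams(text: str) -> dict:
    """Count how often each word follows each other word."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict, word: str):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word)
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


def autocomplete(follows: dict, start: str, max_words: int = 8) -> str:
    """Keep 'tapping the suggested word' until a full stop or the word limit."""
    words = start.split()
    while len(words) < max_words:
        nxt = predict_next(follows, words[-1])
        if nxt is None or nxt == ".":
            break
        words.append(nxt)
    return " ".join(words)


model = train_bigrams(TRAINING_TEXT)
print(autocomplete(model, "today is"))  # prints "today is a good day"
```

The output is entirely a product of the counts in the training text: change the text, and the “most probable” sentence changes with it.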

While this can be entertaining for some, is it really what we want when it comes to our businesses? Do we honestly want a machine to not only dictate the conversation but actually determine the outcome of an interaction – especially one with a client or customer? I’d like to think not.

Reintroduce the human element

So, where does this leave AI companies that have been serving client needs for years and don’t have access to the resources needed to build these new LLMs? Well, instead of jumping on the GenAI bandwagon and letting GPTs take over our clients’ bots and potentially ruin their customer experience, let’s take a few steps back to reintroduce the human element that has been omitted from many of these AI bots.

Don’t get me wrong, this is not a knock against artificial intelligence at all – it could be so much more powerful when combined with the brains of the brilliant humans who have been building customer service automation for years. And that is exactly where intelligent assistants (IAs) come in.

Sure, sometimes IAs use AI, but more often than not their intelligence is a well-considered combination of human and artificial.

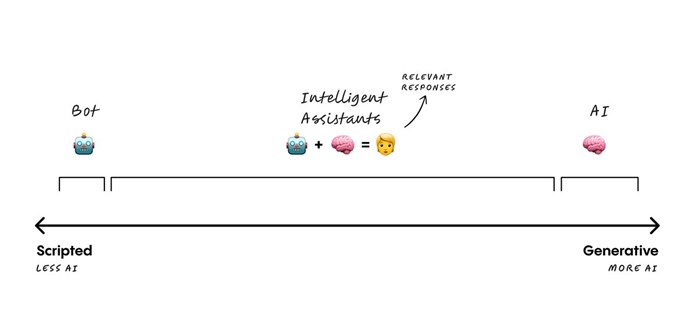

Explaining exactly what an intelligent assistant is can be quite tricky, but I’ve found that visualising it does help:

In the space between

IAs exist somewhere between a basic, scripted bot that provides a defined answer based on a set user input and an AI bot that generates results based on specific prompts. An IA’s exact position on the spectrum – and how much or how little artificial intelligence we incorporate – will differ for each client, based on what the IA needs to achieve.
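One way to picture that spectrum is an assistant that answers from human-written content first and only hands over to a generative model if the client has opted in. The sketch below is an assumption about how such a blend could look, not a description of any particular product: `SCRIPTED_ANSWERS`, `stub_generator` and the `allow_generated` switch are all hypothetical, and the generator is a stand-in rather than a real model call.

```python
from typing import Callable

# Hypothetical answers, written and approved by humans.
SCRIPTED_ANSWERS = {
    "opening hours": "We are open from 09:00 to 17:00 on weekdays.",
    "reset password": "Use the 'Forgot password' link on the login page.",
}

HANDOFF = "I'm not sure about that one. Let me connect you to a colleague."


def stub_generator(user_input: str) -> str:
    """Stand-in for a generative model call; not a real API."""
    return f"[generated reply to: {user_input}]"


def assistant_reply(
    user_input: str,
    allow_generated: bool,
    generate: Callable[[str], str] = stub_generator,
) -> str:
    """Prefer human-written answers; use generated text only if allowed."""
    text = user_input.lower()
    for phrase, answer in SCRIPTED_ANSWERS.items():
        if phrase in text:
            return answer
    if allow_generated:
        # This client has opted in to AI-generated replies for unmatched input.
        return generate(user_input)
    # Otherwise stay on the scripted side of the spectrum and hand over.
    return HANDOFF
```

Flipping `allow_generated` moves the same assistant along the spectrum: with it off, unmatched questions go to a person; with it on, they go to the model.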

While all IAs could technically be classified as bots, not all bots possess the qualities that elevate them to intelligent assistant status, i.e. enhanced functionality, increased complexity and the ability to learn and adapt.

Since IAs are developed to solve human problems, development should always start with the people – their goals, needs, problems and pain points – and then identify the AI tools that could form part of the solution.

Then comes the tricky bit – figuring out how to integrate the tech in a way that makes sense to the end-users conversing (or interacting) with the assistant.

Carefully crafted conversations and more structured interactions greatly improve the quality and accuracy of the information being shared, resulting in fewer fallouts and a far lower potential for frustration for the end-user.

Call me proud, protective, or perhaps a little crazy, but I just don’t like the idea of my IAs causing frustration or being sworn at. And that’s why I wouldn’t dream of unleashing them on the world (or the world on them) without the proper training. Because the success of the interaction determines the quality of the customer experience.