Google has said it will treat AI-generated content as spam, which would lower search traffic for sites that use it. But how easy is AI-generated content to spot?

CNet (short for Computer Network), a US-based media website covering technology and consumer electronics, is one of many popular publications that began using AI to write articles around November last year.

Study findings

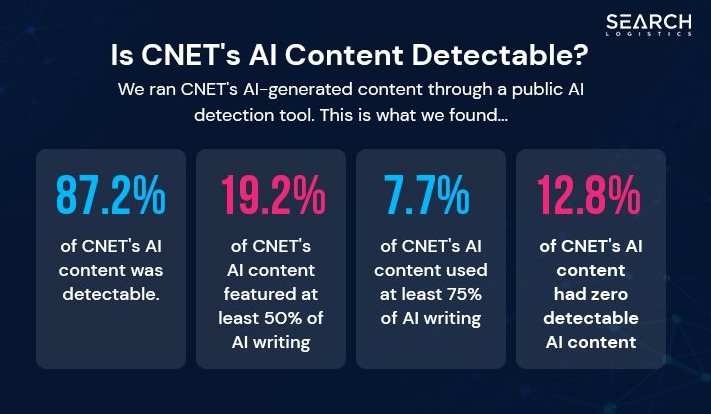

Search Logistics ran all of CNet’s AI-generated content through a public AI detection tool. Its report, "AI Data Study: 87% of CNet AI Content Is Detectable By A Public Tool", found the following:

- 87.2% of CNet’s AI-generated articles were detectable with a public tool: 68 of the 78 articles generated by CNet’s AI were flagged in Search Logistics’ testing.

- 19.2% of CNet’s AI-generated articles were at least 50% AI-written: 15 of the 78 articles tested had at least half of their content written by CNet’s AI engine.

- 7.7% of CNet’s AI-generated articles were at least 75% AI-written: six of the 78 articles had at least three-quarters of their content written by CNet’s AI engine.

- 12.8% of CNet’s AI-generated articles had no detectable AI content: the study could not detect any AI content in 10 of the 78 articles it tested.
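Each headline percentage is simply a count divided by the 78 articles tested. The short Python sketch below recomputes them from the counts quoted above; the labels and variable names are our own, not Search Logistics', and the counts are taken as reported rather than independently verified.

```python
# Article counts as reported by Search Logistics (78 CNet AI articles tested).
TOTAL_ARTICLES = 78
counts = {
    "detectable by a public tool": 68,
    "at least 50% AI-written": 15,
    "at least 75% AI-written": 6,
    "no detectable AI content": 10,
}

# Convert each count to a percentage of the total, rounded to one decimal place.
shares = {label: round(100 * n / TOTAL_ARTICLES, 1) for label, n in counts.items()}

for label, pct in shares.items():
    print(f"{label}: {counts[label]}/{TOTAL_ARTICLES} = {pct}%")
```

The detectable and undetectable groups together account for every article tested (68 + 10 = 78).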

The risk of AI-generated content

Because AI-generated content is this easy to detect, publishers that use it run the risk of being labelled spammers by Google and others.

Using AI-generated content therefore puts a website’s traffic, email deliverability, and revenue at serious risk, on top of the other inherent risks of AI content, such as inaccuracy.

An experiment

The Byte noticed that the articles were AI-generated.

"CNet started publishing articles under the by-line CNet Money Staff for a number of financial explainer articles," it states.

Responding to The Byte's article, CNet said the project was an experiment.

"There's been a lot of talk about AI engines and how they may or may not be used in newsrooms, newsletters, marketing and other information-based services in the coming months and years. Conversations about ChatGPT and other automated technology have raised many important questions about how the information will be created and shared and whether the quality of the stories will prove useful to audiences.

"We decided to do an experiment to answer that question for ourselves."

The publication adds that it has built its reputation by testing new technologies and separating the hype from reality, from voice assistants to augmented reality to the metaverse.

"In November, our CNet Money editorial team started trying out the tech to see if there's a pragmatic use case for an AI assist on basic explainers around financial services topics like What Is Compound Interest? and How to Cash a Check Without a Bank Account. So far we've published about 75 such articles."

It adds that the goal of the experiment is to help its busy editors and staff.

Very dumb errors

But the experiment has backfired, says The Washington Post, noting that CNet had to append "lengthy correction notices to some of its AI-generated articles after Futurism, another tech site, called out the stories for containing some 'very dumb errors'."

It cites an automated article about compound interest that incorrectly said a $10,000 deposit bearing 3% interest would earn $10,300 after the first year. "Nope. Such a deposit would actually earn just $300."

This is despite a dropdown on CNet's articles stating that "This article was generated using automation technology" and was "thoroughly edited and fact-checked by an editor on our editorial staff".

In addition, CNet and its sister publication Bankrate have appended new notices to several other AI-generated pieces, stating that "we are currently reviewing this story for accuracy" and that "if we find errors, we will update and issue corrections".
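The compound-interest error The Washington Post flagged comes down to confusing the final balance with the interest earned. The Python sketch below makes the distinction explicit; the function name `compound_balance` is hypothetical, and it assumes interest is compounded once a year, which the article does not specify.

```python
def compound_balance(principal: float, annual_rate: float, years: int,
                     periods_per_year: int = 1) -> float:
    """Balance after `years`, compounding `periods_per_year` times a year.

    Assumption: annual compounding by default (periods_per_year=1).
    """
    rate_per_period = annual_rate / periods_per_year
    return principal * (1 + rate_per_period) ** (periods_per_year * years)


principal = 10_000
balance = compound_balance(principal, 0.03, 1)  # what the account holds after a year
interest = balance - principal                  # what the deposit actually earned

print(f"Balance after one year: ${balance:,.2f}")
print(f"Interest earned: ${interest:,.2f}")
```

The $10,300 figure is the balance after one year; the deposit itself earns only the difference, $300.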