
So, how does this innovation impact the prevalence of misinformation? At this stage, it may be too early to tell, but it’s important to consider the potential implications, especially when it comes to receiving trusted medical advice and diagnosis.

Using the internet for self-diagnosis and treatment is nothing new. For many years, millions have turned to ‘Dr. Google’ with healthcare questions, making it a primary source of both helpful and harmful information. But now, instead of browsing multiple pages to find an answer, users can turn to a single, seemingly authoritative voice: ChatGPT.

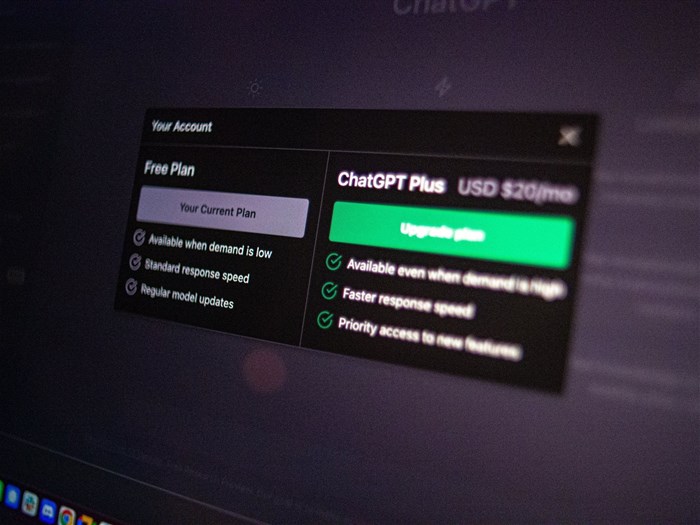

ChatGPT is built on an AI language model that has been ‘trained’ to recognise patterns in language, which allows it to make ‘predictions’ based on that learning. The result is a tool that draws on the information it learned from to produce well-articulated answers to almost any question entered into its chat bar, however broad or narrow the question and however detailed the expected response.

But how reliable is the information? What happens with subject matter where opinions conflict or sources are biased? Here it becomes hard to judge the chatbot’s reliability or potential prejudices.

On its homepage, ChatGPT includes disclaimers that it may generate incorrect or biased information. Yet users cannot tell whether a given response is correct, how the phrasing of a question may have shaped it, or whether and how vested interests may have influenced it. When it comes to health advice, this could amplify certain falsehoods or points of view, or leave blind spots unaddressed. Unlike a Google search, which leads you to specific websites, ChatGPT offers no single source to check, which makes its credibility hard to assess.

For now, ChatGPT is being used through the OpenAI portal. But what happens when third parties such as healthcare providers incorporate ChatGPT or other chatbots into their sites to augment their services? As with any new medical technology, this will require regulation and possibly legislation.

In the United States, this sort of regulation falls under the purview of the Food and Drug Administration (FDA), which is responsible for overseeing new medical devices and medicines. But will it be able to regulate the likes of ChatGPT in healthcare?

This raises the larger question of accountability. Let’s say a healthcare institution begins using a registered AI platform, incorporating it into the diagnostic process, and a claim arises. Who bears the liability: the AI itself, the company that developed the programme, the programmers who developed and maintain the algorithm, or the healthcare institution that adopted the platform and failed to manage the associated risks? Only time will tell.

Chatbots, like other AI technology, will only become more present in our lives. If designed and used responsibly, they can transform patient care by giving patients easily accessible, personalised healthcare information anywhere, at any time. Besides being particularly useful for people in remote areas, they could reduce the burden on healthcare systems by supporting patients in the self-diagnosis and self-management of minor health conditions. The interactive nature of chatbots allows patients to take a more active role in managing their health, with advice tailored to their specific needs.

However, all of this comes with significant risks, a key concern being the amplified spread of misinformation. If the data chatbots rely on is inaccurate or biased, or if the algorithm favours some information over other information, the output may be incorrect or even dangerous.

With great power comes great responsibility. To ensure that this technology is used safely and effectively, data sources need careful selection, outputs must be monitored and validated on an ongoing basis, and users must be educated about chatbots’ limitations. It is critical that we remain vigilant and advocate for the safe and effective use of this technology.

As the renowned surgeon and writer Dr Atul Gawande has stated: "The foundation of medicine is built on the trust between a patient and their physician. Without trust, there can be no effective treatment, no genuine healing, and no meaningful progress towards better health." In a world with Dr. Chatbot, this is no less true.